Never rush in: Always think the whole process through!

For roughly the past five years, the technical communications sector has been focussing more and more of its efforts on a new form of information sharing: content delivery applications.

Content delivery is changing

A new term has even been coined for these systems: ‘content delivery portal’, or ‘CDP’. This overarching term covers all portal-like applications that aim to realise a much sought-after goal: to share content more dynamically and in a way that is tailored to the specific situation and use case. In other words, users will only see, hear and read what they really need to.

Many steps have been taken in this direction before, e.g. with mobile documentation. However, these efforts focussed more on online tools and apps, sometimes with multimedia content. What is really exciting is the possibility of using everything that you have worked so hard to compile in your authoring processes, sometimes over many years and after many attempts to get it just right: namely your metadata, with its taxonomies and hierarchies, which until now was used primarily for automated document creation. In a CDP, this metadata opens up completely different mechanisms: faceted search, links between pieces of content, and automated data retrieval and display options. This in turn means the content can be used for the latest trends, be it the Internet of Things, Industry 4.0 or whatever the next big development is called.
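To make the idea of faceted search concrete, here is a minimal Python sketch. It assumes that each topic exported from the authoring system carries a flat set of metadata values; the topic data, the facet names (`product`, `info_type`, `audience`) and both helper functions are hypothetical illustrations, not the API of any particular CMS or CDP.

```python
from collections import Counter

# Hypothetical topics: each carries metadata assigned in the authoring system.
TOPICS = [
    {"id": "t1", "title": "Replace filter", "product": "Pump A",
     "info_type": "task", "audience": "service"},
    {"id": "t2", "title": "Safety notes", "product": "Pump A",
     "info_type": "concept", "audience": "operator"},
    {"id": "t3", "title": "Replace seal", "product": "Pump B",
     "info_type": "task", "audience": "service"},
]


def filter_topics(topics, **selected):
    """Return the topics whose metadata matches every selected facet value."""
    return [
        topic for topic in topics
        if all(topic.get(facet) == value for facet, value in selected.items())
    ]


def facet_counts(topics, facet):
    """Count how many topics carry each value of one facet."""
    return Counter(topic[facet] for topic in topics)


# A service technician narrows the result set down to task topics.
hits = filter_topics(TOPICS, info_type="task", audience="service")
print([topic["id"] for topic in hits])      # ['t1', 't3']
print(dict(facet_counts(hits, "product")))  # {'Pump A': 1, 'Pump B': 1}
```

The counts per facet value are what a portal would typically show next to each filter option, so that users can see how many topics remain before they narrow the selection further.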

But are we not forgetting something?

In all our excitement about the new opportunities for our systems and processes, it is important to recognise how easily we can overlook certain other considerations. Indeed, we might never have given them enough thought.

When expanding our information logistics with new content and systems, what is the situation in terms of security, i.e. storing the content and documents we create? We need genuinely secure, certified storage solutions that are in line with legal requirements and can be used over a very long period: long enough to fulfil the retention periods set out by different industries, but also beyond that, so that if, in 30 years’ time, our system is compromised by illegal activity, we can quickly identify which documents were ‘released’, with complete traceability every time.

Authoring systems enable us to create and manage our content. They are smart and efficient – of that there is no doubt. However, the documents created across a wide range of different media are not currently archived as they should be. In some projects, the systems interact with other business systems such as SAP. However, it is rare to encounter a complete package of genuinely certifiable processes including secure transfer mechanisms and specialist long-term archiving.

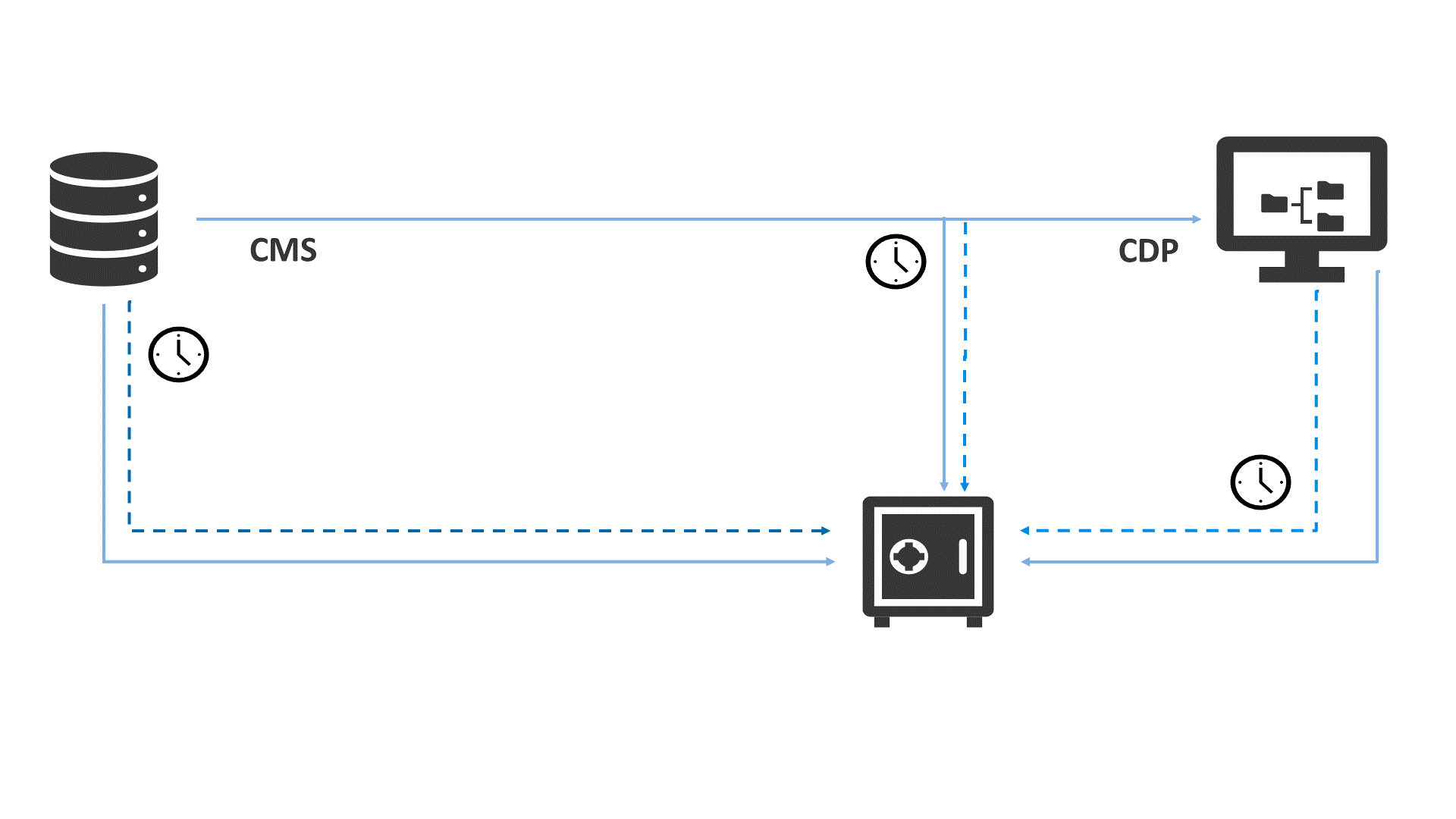

Now we have a solution: System manufacturers such as SCHEMA have recently started offering standard interfaces and system/process integration with the requisite archiving systems and concepts. SafeArch® is one such standard concept: It is process-driven and ensures that content is transferred securely from the CMS to the requisite archiving system.

This guarantees that every publication that is ‘released’ can also be found, in exactly the same version, in the archive and that all processes are traceable in line with statutory requirements. It’s a big step in the right direction!
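One common way to check that a released publication and its archived copy are exactly the same version is to compare cryptographic fingerprints. The following Python sketch illustrates that general technique only; it is not a description of how SafeArch® or any SCHEMA interface works, and the in-memory `archive` dictionary, the document ID and the function names are assumptions made for the example.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return the SHA-256 digest of a publication as a hex string."""
    return hashlib.sha256(content).hexdigest()


def release(archive: dict, doc_id: str, version: str, content: bytes) -> str:
    """Store a released publication together with its fingerprint."""
    digest = fingerprint(content)
    archive[(doc_id, version)] = {"sha256": digest, "content": content}
    return digest


def verify(archive: dict, doc_id: str, version: str, published: bytes) -> bool:
    """Check that a published file matches the archived release byte for byte."""
    entry = archive.get((doc_id, version))
    return entry is not None and entry["sha256"] == fingerprint(published)


# Hypothetical stand-in for a long-term archive.
archive = {}
release(archive, "manual-001", "1.0", b"Released manual text")

print(verify(archive, "manual-001", "1.0", b"Released manual text"))  # True
print(verify(archive, "manual-001", "1.0", b"Altered manual text"))   # False
```

A real archive would keep the fingerprints in certified, tamper-resistant storage; the dictionary here only stands in for that system.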

The future is dynamic

What about the future trend towards dynamic content delivery? And the move away from static documents in PDF format? SafeArch® will also enable CMS and CDP producers like SCHEMA to keep pace with this trend. The requirements will simply need to be taken one step further.

Content that is transferred to a CDP must, of course, still be archived, just in different formats and packages than before. It will still need to be accessible, and that’s precisely where concepts such as SafeArch® will be needed to ensure traceability.

The next step in terms of traceability is to ensure that subsequent processes are tracked or confirmed by users. For instance, data on which users have accessed or downloaded information or documents could, in turn, also be made available via a CDP. In future, this requirement may even form part of the contract between parties.

Data about what users have searched for and read, as well as what content has been created, can all be logged on the basis of web requests. Indeed, this process data is being archived more and more often as evidence of what users have actually been doing.
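As a rough illustration of how such process data could be logged so that later changes become detectable, the Python sketch below chains each access event to the previous one with a hash. This is one possible technique, not a method named in this article; the event fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash used before the first event


def _digest(body: dict) -> str:
    """Hash an event body in a stable, key-sorted JSON form."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(log: list, user: str, action: str, resource: str, timestamp: str) -> dict:
    """Append an access event that is linked to the previous event's hash."""
    event = {
        "user": user,
        "action": action,
        "resource": resource,
        "timestamp": timestamp,
        "prev": log[-1]["hash"] if log else GENESIS,
    }
    event["hash"] = _digest(event)  # computed before the "hash" key exists
    log.append(event)
    return event


def chain_is_intact(log: list) -> bool:
    """Recompute every hash and link; return False if any event was changed."""
    prev_hash = GENESIS
    for event in log:
        body = {key: value for key, value in event.items() if key != "hash"}
        if event["prev"] != prev_hash or _digest(body) != event["hash"]:
            return False
        prev_hash = event["hash"]
    return True


log = []
append_event(log, "user-17", "search", "query: replace filter", "2024-01-01T10:00:00Z")
append_event(log, "user-17", "download", "manual-001 v1.0", "2024-01-01T10:02:00Z")
print(chain_is_intact(log))  # True

log[0]["resource"] = "query: something else"  # simulate later tampering
print(chain_is_intact(log))  # False
```

Because every event includes the hash of its predecessor, editing or removing an earlier entry breaks the chain from that point onwards, which is the property that makes such a log usable as evidence.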

In fact, it’s quite logical that in the case of dynamic content, the process data and the archiving of this data should also be dynamic. Don’t you think? Ultimately, this is what it means to have thought the processes through thoroughly.

About Prof. Wolfgang Ziegler

Wolfgang Ziegler is a theoretical physicist, who has been working at the Karlsruhe University of Applied Sciences since 2003. He researches and teaches around the world on the subject of information and content management. He has also been providing business consultancy services through the Institute for Information and Content Management in the area of intelligent information logistics for over 20 years. He is a renowned CMS and CDP expert in the areas of PI classification, the REx method for measuring KPIs, delivery concepts such as CoReAn (CDP analytics) and microDocs.